To make the most of this guide, you’ll need a baseline understanding of key concepts like events, charts, and properties. If you are still learning Heap and these terms are unfamiliar, we recommend taking our Hello Heap course or reviewing our Setting Up Heap guide before starting this guide.

Introduction

We all know that measuring usage in a meaningful way can be a challenge. Businesses make changes they think will improve the customer experience, but often fall short when it comes to testing the impact of those changes on the user experience.

Testing theories before putting a change into practice is an important part of improving adoption and ultimately retaining customers.

If you’re new to analysis in Heap, we recommend reviewing Create Your First Chart, which covers helpful charts 101 info.

Step 1: Identify a Hypothesis to Test

Testing a hypothesis can be useful for any stage of the customer lifecycle! Improve acquisition, adoption, or retention by leveraging A/B variations.

Determine which usage event you want to improve. A usage event is an action that indicates a customer is engaging with a feature on your site or app. Is it a button click, a page view, or a series of actions? Whatever the case, this event (or set of events) will have the A/B variation associated with it!

Variations can test the color of a button, its location, its wording, when the button is available to a user, and more.

If you have multiple hypotheses, we recommend running one experiment at a time. Running a single experiment at a time allows you to properly analyze your hypothesis, because any change in behavior can be attributed to that one experiment. Experiments should typically run for a minimum of 3 months to collect meaningful data.

Step 2: Add Test Variations to Heap via Properties

A/B variations will be a property associated with the event you are experimenting with. There are 3 ways experiment data can be added to Heap:

- Via direct integration

- Via Snapshots

- Via Heap’s addEventProperties API

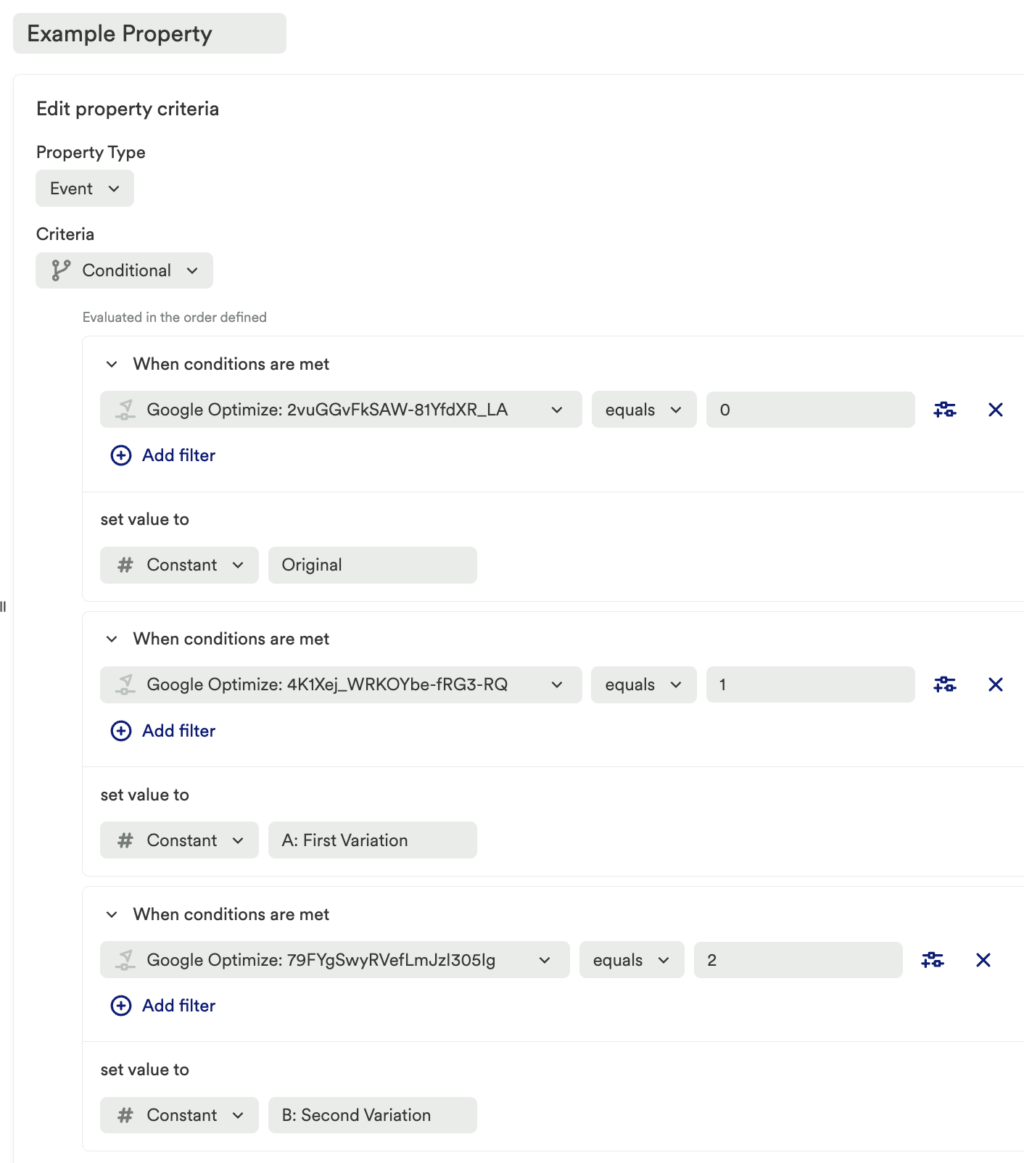

Below is an example of a built-out property using an A/B variation brought in via the Google Optimize integration:

We strongly recommend QA’ing your newly added property variations before moving forward with your experiment. Use Heap’s Live view to confirm that your variant properties are firing correctly with their associated event.
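For the addEventProperties option listed above, a minimal sketch might look like the following. It assumes Heap's web snippet is already installed so that a global `heap` object exists. The `HeapLike` interface, the `assignVariation` and `tagExperiment` helpers, the experiment name, and the variation labels are all hypothetical and exist only for illustration. A stand-in object replaces the real SDK so the sketch runs on its own.

```typescript
// Minimal shape of the one Heap web SDK method used here. The real SDK is
// loaded by Heap's snippet; this interface only keeps the sketch self-contained.
interface HeapLike {
  addEventProperties(properties: Record<string, string | number>): void;
}

// Hypothetical helper: deterministically picks a variation from a user ID,
// so the same user always sees the same variation.
function assignVariation(userId: string, variations: string[]): string {
  let hash = 0;
  for (let i = 0; i < userId.length; i++) {
    hash = (hash * 31 + userId.charCodeAt(i)) >>> 0; // keep as unsigned 32-bit
  }
  const picked = variations[hash % variations.length];
  if (picked === undefined) {
    throw new Error("variations must not be empty");
  }
  return picked;
}

// Hypothetical helper: attaches the variation as an event property so that
// events captured afterwards carry it.
function tagExperiment(heap: HeapLike, experimentName: string, variation: string): void {
  heap.addEventProperties({ [experimentName]: variation });
}

// Stand-in for the real global `heap` object (assumption: used for local runs only).
const recorded: Record<string, string | number>[] = [];
const fakeHeap: HeapLike = {
  addEventProperties: (props) => {
    recorded.push(props);
  },
};

const assigned = assignVariation("user-123", ["control", "green-button"]);
tagExperiment(fakeHeap, "Signup Button Experiment", assigned);
console.log(recorded);
```

In a real page you would pass the global `heap` object instead of `fakeHeap`. If your experimentation tool already assigns variations, use its assignment rather than the hash shown here.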

Step 3: Analyze your Variations

Once you have the experiment variations attached to the event(s) you wish to test, you can begin to analyze how the experiment affects your user behavior.

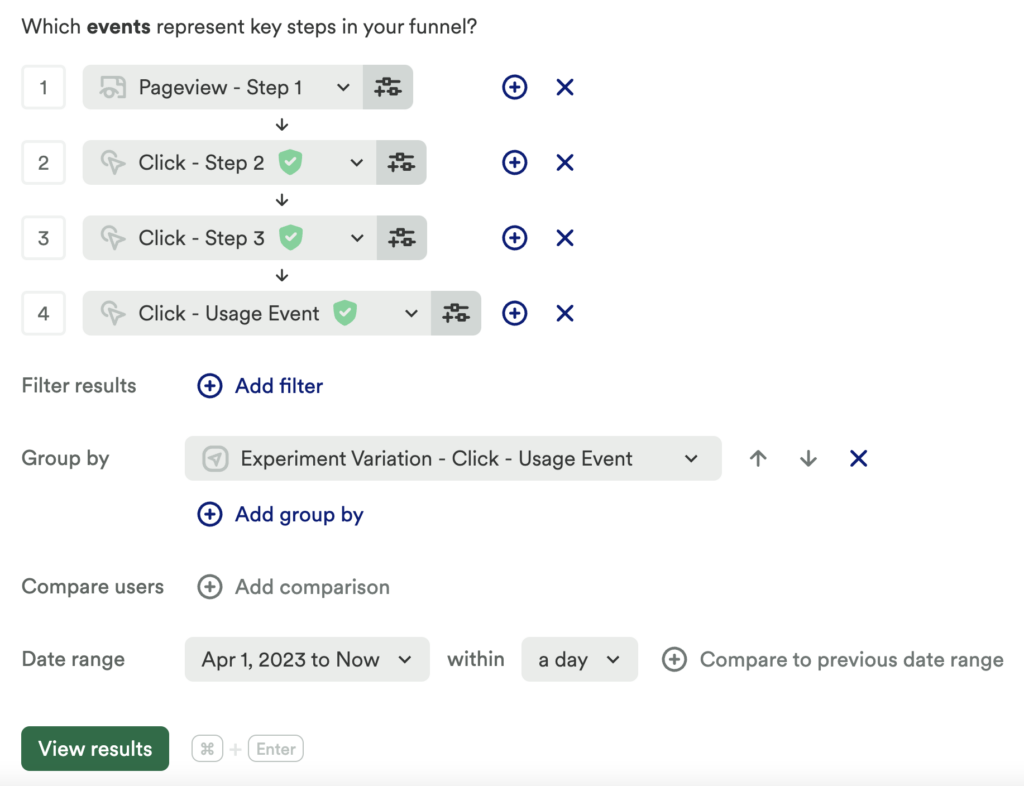

Chart 1: Baseline chart: What is the conversion rate of your event before the experiment?

To set up this baseline chart, create a funnel chart and add appropriate events, including:

- One Pageview event for the page where your experiment is, or will be, located

- One Usage event that will ultimately indicate if someone successfully interacts with your experiment

For the date range, select Custom Date Range to analyze a period before the experiment was live.

What does this tell you?

To properly understand if your experiment has had any impact, you will need this baseline chart to compare subsequent charts to.

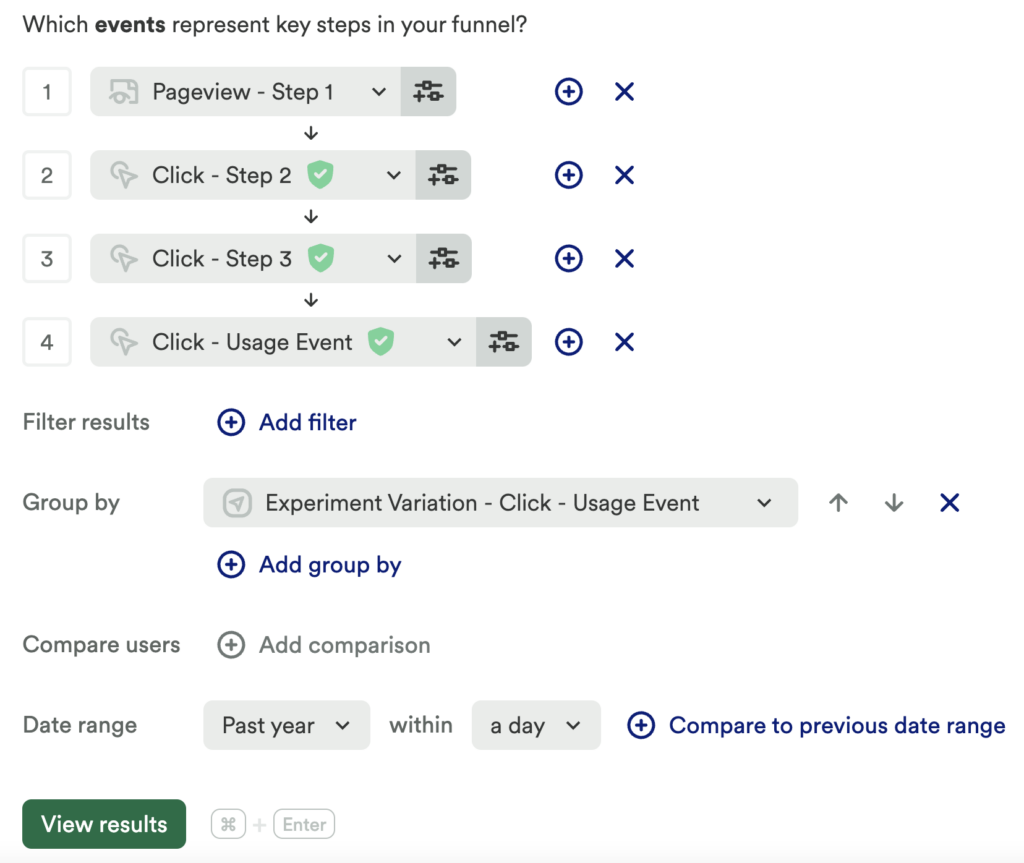

Chart 2: How does the experiment affect your usage goal?

For this chart, use the chart template How does my experiment affect conversion rate? with the same events you used in Chart 1. Add a group by to include the experiment property variations you created in Step 2.

For the date range, select Custom Date Range to analyze the period during which the experiment was live, since the variation properties only exist on events captured while it was running.

What does this tell you?

This chart shows the conversion rate for each variation, so you can see which variation, if any, has had the greatest impact on user behavior. If conversion has improved from your baseline, you can continue analyzing your variation before implementing changes. If conversion has not improved, you will want to create a new hypothesis to test.
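The comparison between the baseline and each variation can also be worked through by hand. The sketch below is an illustration only: the `FunnelCounts` shape, the function names, and all of the numbers are made up, and in practice you would read the counts off your own funnel charts.

```typescript
// Counts read from a two-step funnel: Pageview event, then usage event.
interface FunnelCounts {
  viewed: number;    // users who did the Pageview event
  converted: number; // of those, users who also did the usage event
}

// Conversion rate as a fraction between 0 and 1 (0 when nobody viewed).
function conversionRate(counts: FunnelCounts): number {
  return counts.viewed === 0 ? 0 : counts.converted / counts.viewed;
}

// Relative lift of a variation over the baseline, e.g. 0.25 means 25% higher.
// Returns NaN when the baseline rate is 0, because lift is undefined there.
function relativeLift(baseline: FunnelCounts, variation: FunnelCounts): number {
  const base = conversionRate(baseline);
  if (base === 0) {
    return NaN;
  }
  return (conversionRate(variation) - base) / base;
}

// Made-up numbers for illustration only.
const baseline: FunnelCounts = { viewed: 2000, converted: 200 };
const variations: { label: string; counts: FunnelCounts }[] = [
  { label: "control", counts: { viewed: 1000, converted: 100 } },
  { label: "green-button", counts: { viewed: 1000, converted: 125 } },
];

for (const v of variations) {
  const rate = (conversionRate(v.counts) * 100).toFixed(1);
  const lift = (relativeLift(baseline, v.counts) * 100).toFixed(1);
  console.log(`${v.label}: ${rate}% conversion, ${lift}% lift vs. baseline`);
}
```

A positive lift only suggests an improvement. With small user counts the difference may be noise, which is one reason to let the experiment run long enough to collect meaningful data.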

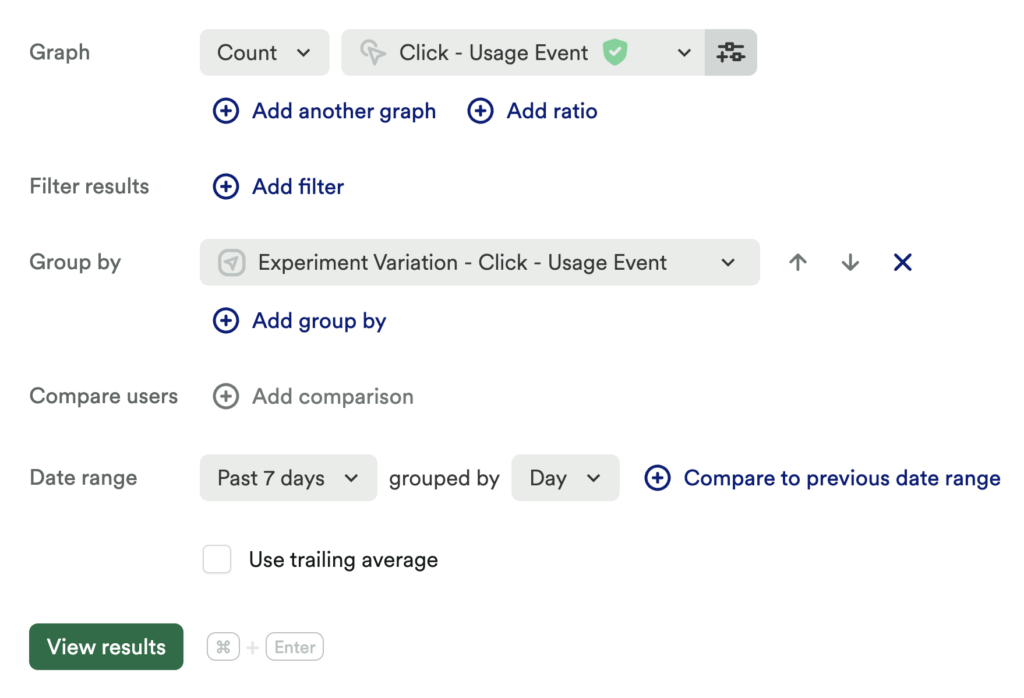

Chart 3: Compare usage counts

Set up a usage over time chart to measure the count of your usage event. Add a group by to include the experiment property variations you created in Step 2.

What does this tell you?

Directly compare usage counts of your goal event outside of a funnel to understand overall engagement.

Compare relevant segments to see if your experiment has had any impact on a particular subset of users.
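The grouping behind a usage-over-time chart can be sketched as a count of usage events per day and per variation. The `UsageEvent` shape and the sample records below are hypothetical. They stand in for whatever event data you have available and are not a Heap export format.

```typescript
// Hypothetical record for one occurrence of the usage event.
interface UsageEvent {
  time: string;      // ISO 8601 timestamp, e.g. "2024-01-01T09:00:00Z"
  variation: string; // value of the experiment property on the event
}

// Counts events per day, then per variation within each day.
function countByDayAndVariation(events: UsageEvent[]): Record<string, Record<string, number>> {
  const counts: Record<string, Record<string, number>> = {};
  for (const e of events) {
    const day = e.time.slice(0, 10); // "YYYY-MM-DD" part of the timestamp
    const perDay = counts[day] ?? {};
    perDay[e.variation] = (perDay[e.variation] ?? 0) + 1;
    counts[day] = perDay;
  }
  return counts;
}

// Made-up sample data for illustration only.
const sampleEvents: UsageEvent[] = [
  { time: "2024-01-01T09:00:00Z", variation: "control" },
  { time: "2024-01-01T10:30:00Z", variation: "green-button" },
  { time: "2024-01-01T11:00:00Z", variation: "green-button" },
  { time: "2024-01-02T08:15:00Z", variation: "control" },
];

const dailyCounts = countByDayAndVariation(sampleEvents);
console.log(JSON.stringify(dailyCounts, null, 2));
```

Raw counts like these are most comparable when each variation is shown to a similar number of users. Otherwise the funnel conversion rate is the fairer comparison.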

Step 4: Interpret your results and take action

Once you have proven (or disproven) your hypothesis, you can use the insights you’ve found to take action and make appropriate changes.

For example, you might use this data as leverage to ask for more feature-specific resources or budget. Alternatively, you might roll out a similar experiment to determine if any other features could benefit from the same treatment.

Disproving your hypothesis is also useful. If you disprove your hypothesis, you know that your base assumption was not true, so you can iterate: find a new hypothesis and run the above steps again.

Conclusion

Experiments are a great way to understand how your users will best interact with your tool. Use this data to implement product improvements that will make your users’ lives easier while increasing your acquisition, adoption, and retention.

Proving or disproving your hypothesis does not mean the work stops there! Continue iterating and continue to run experiments to create a reliable product and delightful customer experience. Even highly used features can do with an upgrade from time to time!